The problem is that AIPRM stops writing and will not restart where it left off. I have tried everything, including saying “please continue after…”, and nothing works. When I click the “Continue” button, it just goes off into gibberish about writing.

My question is: how do I tell AIPRM to continue writing where it left off?

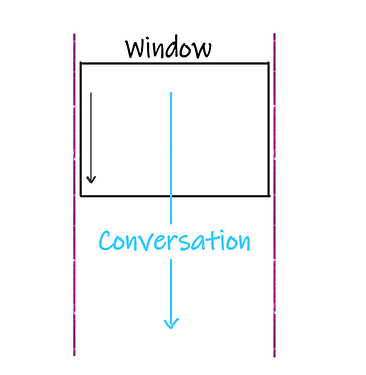

Imagine ChatGPT, or any other existing LLM, as having an eye. That eye can only see and understand the context of your conversation/text through a Window, and that Window has a limited length (about 4,000 tokens, or roughly 3,000 words, for ChatGPT-3.5).

When you start a new conversation with ChatGPT, the Window sits at the start of your conversation. As you keep chatting and the conversation gets longer, the Window moves down…

At a certain conversation length, ChatGPT can no longer see the context from the start of the conversation, and as the conversation keeps growing it loses more of the earlier messages, and so on…
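To make the moving Window concrete, here is a minimal Python sketch. The `visible_context` helper is hypothetical, and it counts whitespace-separated words as a stand-in for tokens; how ChatGPT actually trims a conversation is not described in this thread, so treat this as a toy model only.

```python
def visible_context(messages, window_size):
    """Return the most recent messages that fit inside the window.

    Toy model: sizes are counted in whitespace-separated words,
    which only approximates real token counts.
    """
    kept = []
    used = 0
    # Walk backwards from the newest message to the oldest.
    for message in reversed(messages):
        size = len(message.split())
        if used + size > window_size:
            break  # everything older than this falls outside the window
        kept.append(message)
        used += size
    kept.reverse()  # restore chronological order
    return kept


# Made-up conversation for illustration.
conversation = [
    "Write a long article about gardening in ten sections.",
    "Here is section one of the article ...",
    "Please continue after section one.",
]

# With a small window, the original instruction is no longer visible.
for line in visible_context(conversation, window_size=15):
    print(line)
```

With a window of 15 words, only the last two messages fit, so the model in this toy setup never "sees" the request for ten sections when it is asked to continue.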

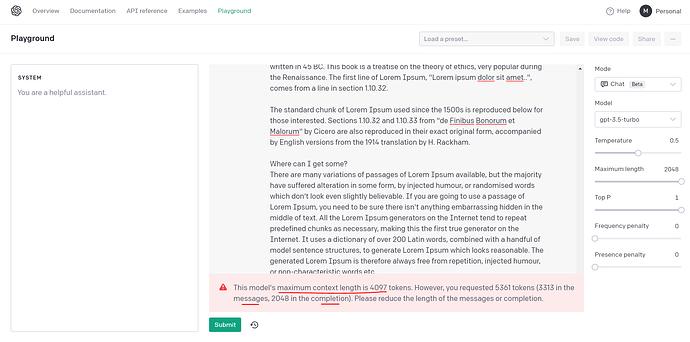

That Window length includes both your new message and ChatGPT’s upcoming answer.

So no matter how advanced your prompts are, ChatGPT will not remember anything that has moved outside the Window.
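Because the Window has to hold the earlier conversation, your new message, and the answer, the room left for the answer shrinks as the chat grows. A small Python sketch of that arithmetic follows; the `reply_budget` function and all the numbers are illustrative assumptions, not real measurements from ChatGPT.

```python
def reply_budget(window_tokens, history_tokens, new_message_tokens):
    """Tokens left for the answer once the prompt side is counted.

    Assumes, as described above, that history, the new message,
    and the answer all share one fixed-size window.
    """
    remaining = window_tokens - history_tokens - new_message_tokens
    return max(0, remaining)  # never report a negative budget


# Illustrative numbers only.
print(reply_budget(4000, 3200, 300))  # 500
```

Under these assumed numbers, only 500 tokens are left for the answer, which is one way a long piece of writing can get cut off partway.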


Thanks, this is really interesting. Then how can I make sure AIPRM gets to the end of what I ask it to do?

AIPRM isn’t the problem. What you are dealing with is the built-in limitations that OpenAI put onto ChatGPT by design.

It takes a lot of processing power to run an AI. It takes even more to actually train one, but even running one that is pre-trained (the P in GPT) takes a lot of memory and processing cores. And that’s just for one user. ChatGPT was built to be available to anyone and everyone - millions of users. It is a mass-market product, built for chatting with.

It was never designed to write books. It was never designed to write a thesis or even a term paper. It was built for a task they put into the very name - Chat. And even there, it has limitations. ChatGPT as it first came out had a memory of roughly 4,000 tokens (about 3,000 words). GPT-4, which came along later, has a significantly higher token limit (though it still has one), although it also has a finer-grained language model, meaning it may produce more tokens from a set number of words.
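The "4,000 tokens is about 3,000 words" rule of thumb works out to roughly 0.75 words per token. Here is a small Python sketch of that conversion; the helper names are hypothetical, and the ratio is only the rough average quoted above, since real token counts depend on the tokenizer and the text.

```python
import math

WORDS_PER_TOKEN = 0.75  # rough ratio implied by 4,000 tokens ~ 3,000 words


def estimate_words(tokens, words_per_token=WORDS_PER_TOKEN):
    """Approximate how many words fit in a given number of tokens."""
    return round(tokens * words_per_token)


def estimate_tokens(words, words_per_token=WORDS_PER_TOKEN):
    """Approximate how many tokens a given number of words will use."""
    return math.ceil(words / words_per_token)


print(estimate_words(4000))   # 3000
print(estimate_tokens(3000))  # 4000
```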

Think of it a bit like picture resolutions. Two screens might be the same size, but what makes the difference is the resolution, the number of pixels on those screens, allowing finer detail with more pixels. Just like pixels, the number of tokens taken from the training corpus affects the definition and granularity of the model’s understanding.

Another reason for limiting the chat memory was very simply to prevent misuse and abuse. It severely limits the chances of anyone training the AI over the course of a conversation to produce unwanted outputs, such as racism, abuse, etc. You can’t lead an AI too far astray if it can only remember 3,000 words before falling back to its original state.