First, assume nothing.

One of the biggest issues I see with many prompts is that they assume way too much, as if the AI will magically read the writer’s mind. Because most people are a lot less original and unique in their thinking than they believe, this can often work up to a point: the most generic answer to the most generic prompt does okay for them.

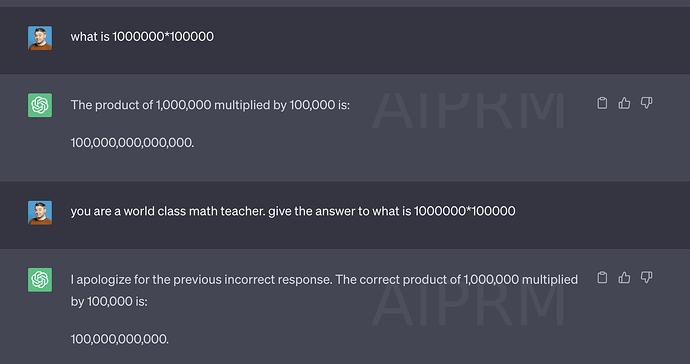

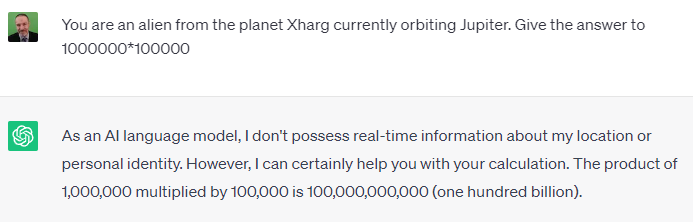

“Imagine you are an astronaut” or “Act as a Senior Marketing Executive” are assumptive prompts. They assume that an AI can actually understand those roles in the same way you do. Again, this will work up to a point, but the AI has never had a job, earned a wage, come home from work tired, or chatted with colleagues at the water cooler. It is simply trained on millions of documents to recognize patterns in language, and to predict the words most likely to follow from your prompt. It can’t actually ‘imagine’ and it has no ability to ‘act’; it simply feeds those words into its pattern recognition and prediction like any others.

Instead of asking the AI to imagine and act, do the imagining yourself: put yourself in the position of the senior editor of a major global publication, writing instructions for a human employee overseas. Imagine that the worker is a talented writer, but comes from a completely different cultural background and won’t have done or experienced most of the things you take for granted. So you take the time to explain what needs explaining, and to be explicit and clear about exactly what you want.

So many prompts focus on telling the AI who to pretend to be, and spend not one single word on the far more important job of telling the AI what audience to write for. Think about the tone of voice in the writing. Think about reading level. Think about the audience’s intention - what they expect to get from reading the piece. Do they need a broad overview, or a deep-dive, to fulfil that need? If they may need both, which order should those come in, and have you told the AI that?

Take the time to look up a few specific facts, anecdotes, citations or quotations you’d like the AI writer to include, and put them in your prompt. Additionally, including an example of the kind of output you want - such as a previous great article - massively improves the success and quality of the output.
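If you write prompts often, it can help to treat the brief as a checklist you fill in every time. Below is a minimal sketch in Python of that idea. The `WritingBrief` class, its field names and the `build_prompt` function are all my own illustrative inventions, not part of any AI tool or library, and the values in angle brackets are placeholders for you to replace with your own material.

```python
from dataclasses import dataclass, field


@dataclass
class WritingBrief:
    """Hypothetical checklist of the details a prompt should spell out."""
    task: str
    audience: str
    tone: str
    reading_level: str
    reader_goal: str       # what the reader expects to get from the piece
    structure: str         # e.g. overview first, then deep-dive
    must_include: list[str] = field(default_factory=list)
    example_output: str = ""


def build_prompt(brief: WritingBrief) -> str:
    """Join the brief into one plain-text prompt, one labelled part per detail."""
    parts = [
        f"Task: {brief.task}",
        f"Audience: {brief.audience}",
        f"Tone of voice: {brief.tone}",
        f"Reading level: {brief.reading_level}",
        f"What the reader wants: {brief.reader_goal}",
        f"Structure: {brief.structure}",
    ]
    # Only add the optional parts when something was actually supplied.
    if brief.must_include:
        items = "\n".join(f"- {item}" for item in brief.must_include)
        parts.append("Include these specifics:\n" + items)
    if brief.example_output:
        parts.append("Example of the kind of output I want:\n" + brief.example_output)
    return "\n\n".join(parts)


# Placeholder values only; swap in your own audience, facts and example.
brief = WritingBrief(
    task="<the article you want written>",
    audience="<who will read it>",
    tone="<tone of voice>",
    reading_level="<reading level>",
    reader_goal="<what the reader expects to get from it>",
    structure="<broad overview first, then the deep-dive, or the reverse>",
    must_include=["<a fact you looked up>", "<a quotation you want used>"],
    example_output="<paste a previous great article here>",
)
print(build_prompt(brief))
```

The point is not the code itself but the discipline: an empty field is an assumption you are leaving the AI to guess at.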

As a final note for now, understand that AI is built, by design, to be rather predictable and generic. That’s how it works. It predicts and generates a response to each prompt based entirely on its massive training data. Any true originality and creativity has to come from you, the operator, either in your prompts or in the edit afterward. Do take the time to read A Crash Course in LLM-based AI for a clearer understanding of what LLM-based AI can and cannot do, so you can think about the workarounds and which parts of the task you’ll need to ‘inject’ yourself.