I am currently facing a dilemma: I want to train ChatGPT to act as an online sales customer service agent, but no matter how I restrict it, ChatGPT still answers questions that have nothing to do with the product. This gives me a real headache, because when customers find that our customer service bot will help them solve any problem, they will not buy our products. Instead they will use it for free, or even maliciously spam it with requests to drive up our costs. Does anyone have a good idea to solve this problem?

The ‘P’ in GPT stands for ‘Pre-trained’, meaning it has already been trained before you ever get to interact with it. To prevent abuse and corruption of that shared AI, OpenAI released ChatGPT with a whole bunch of limitations and safeguards to absolutely prevent anyone ‘training’ it, and thus spoiling it for all others. The limitations on not allowing it native access to the web or other external sources, the limitations on prompt size and length, and many, many other limitations are all there expressly to prevent you ‘training’ or even influencing ChatGPT itself.

For what you want to do, you don’t want an end application already designed for a specific purpose, such as ChatGPT. Instead, you need to run your own instance, where you have ownership of the AI and thus are free to develop it further. That’s what licensing GPT itself is for. Or any other LLM for that matter. By licensing the LLM, you install it on your own servers and can further train it on your own sources, for your own specific purposes, etc. Make no mistake though: licensing GPT4 is expensive, and very few even have the opportunity right now. Licensing GPT3 is cheaper, of course, and would probably meet your needs, but it is still going to require that you pay a hefty license fee AND have the hardware necessary to train and run an AI.

What I need to confirm is whether this “hefty license” refers to the $20 a month upgrade. Or do I need to pay tens of thousands of dollars in licensing fees?

It all depends on usage really, but most definitely closer to the thousands a month. See “How Much Does It Cost to Use GPT? GPT-3 Pricing Explained”.

If it works out to 100 US dollars for 3 million words, that price is very cost-effective for a smart customer service agent. Doing business is about efficiency, and basically no businessman will waste time chatting with a robot about things other than business, so the words used in one transaction should not exceed 3,000, unless someone wants to maliciously attack us and increase our costs. I have already found someone to solve that problem with anti-web-crawler technology. Also, as far as you know, is anyone working on a “General AI Customer Service”? If so, I don’t need to spend time on this.
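The arithmetic behind that estimate can be sketched as follows. This is only a rough sketch that uses the figures quoted in this post (100 US dollars per 3 million words, at most 3,000 words per transaction); real API pricing is typically counted in tokens rather than words, so the actual numbers will differ. The function names are made up for illustration.

```python
# Figures taken from the post above; they are assumptions, not a real price list.
PRICE_USD = 100                    # price of one block of words
WORDS_PER_PRICE = 3_000_000        # words included in that block
MAX_WORDS_PER_TRANSACTION = 3_000  # assumed upper bound per customer conversation


def cost_per_transaction(words: int) -> float:
    """Cost in USD of one conversation that uses `words` words."""
    return words * PRICE_USD / WORDS_PER_PRICE


def transactions_per_budget(budget_usd: int, words: int) -> int:
    """How many conversations of `words` words fit into a whole-dollar budget."""
    # Integer arithmetic avoids floating-point rounding surprises.
    total_words = budget_usd * WORDS_PER_PRICE // PRICE_USD
    return total_words // words


if __name__ == "__main__":
    print(cost_per_transaction(MAX_WORDS_PER_TRANSACTION))
    print(transactions_per_budget(100, MAX_WORDS_PER_TRANSACTION))
```

Under these assumptions a worst-case 3,000-word conversation costs about 10 cents, so 100 dollars covers roughly a thousand such conversations.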

There’s a huge range of chatbots for sites - heck, we have one right here on these very forums. Most of them will do most of what you probably expect, even without the power of GPT. But they all take some time to train, obviously. So do human intelligences.

Just search for “Customer Service Chatbot” on Google (or Baidu) and I’m sure you’ll find more than enough to spend hours researching and checking out. But I have this suspicion that, for you, this is a little more than just finding something that works. I know you are fascinated by AI, and keen to explore all the ideas and learning you can. Training an AI on your own corpus of data is where the power is, right now. A shared AI, trained on all general data, doesn’t offer what an AI built on carefully selected, purpose-chosen data can offer.

At some future point, licensed AIs will be cheaper, more customizable, and closer to AGI (Artificial General Intelligence), where the AI is able to cope with changing demands, to work for many people, with knowledge specific to each purpose, without that ‘tainting’ its knowledge for other purposes and uses. But that’s not today.

Maybe you should look into AutoGPT and see if that could be turned to your needs? https://autogpt.net/

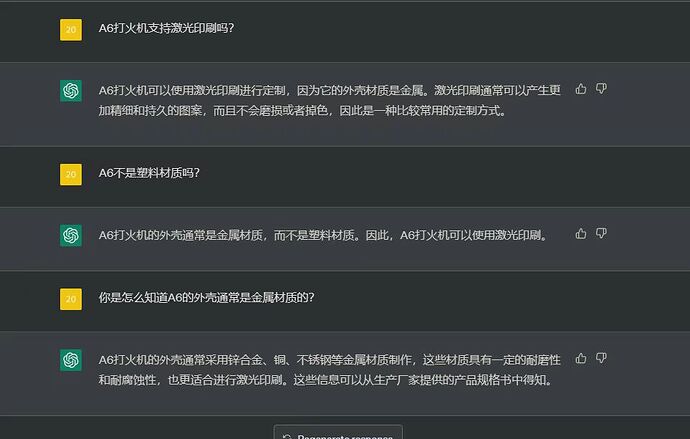

Our factory produces and sells lighters. When I was training ChatGPT yesterday, a very strange thing happened. First, you need to know the difference between the A6 and the A68. They are essentially the same product with the same core: the A6 is mainly wrapped with paper, and an A6 fitted with a metal case is the A68. When I was training ChatGPT, I wrote the information for these two products separately. Before that, I had also entered the information for A1, A2, A3 and other products. Then I pretended to be a customer and asked ChatGPT: “Can A6 be used for laser printing advertising?” It replied: “Yes”. But according to the A6 product information I had entered, the A6 is made of plastic, and the laser printing process would damage the lighter. So I asked, puzzled: “Isn’t the A6 plastic? Why can laser printing be used?” Then came the scariest part. It replied: “A6 usually uses a metal casing and can be laser-printed with advertisements.” Wtf? Its answer is reasonable, even a little “too good”, which confuses me. How did it figure out the relationship between the A6 and the A68? From its perspective these two products are completely unrelated, so how could it “guess” that the A6 can be laser printed once it is given a metal casing? Is this a joke the AI is playing on me, or is it what is currently called an “AI hallucination” in academic circles? (PS: I have attached a screenshot of this dialogue. After thinking it over carefully, I was a little scared.)

As far as I’m aware, you cannot ‘train’ ChatGPT. You can prompt it, and feed it context, but that is all stored in a very small localized memory just of your chat, and it never, ever, becomes a core part of the knowledge of the AI itself.
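To make the “prompt it and feed it context” point concrete, here is a minimal sketch of how product facts could be packed into the context of a single chat. It assumes the role/content message format used by chat-style APIs; `build_messages` and the product descriptions are illustrative only (the A6 and A68 details are paraphrased from this thread), and nothing here calls a real service. Because the model keeps nothing between chats, the same facts have to be sent again in every new conversation.

```python
def build_messages(product_facts, history, question):
    """Assemble the full context for one chat turn (hypothetical helper).

    product_facts: dict mapping product name to a short description
    history: earlier messages of this same chat, as role/content dicts
    question: the customer's new question
    """
    product_lines = "\n".join(
        f"- {name}: {desc}" for name, desc in product_facts.items()
    )
    system_prompt = (
        "You are a sales assistant for a lighter factory. "
        "Answer only questions about the products listed below. "
        "If a question is unrelated to them, politely decline.\n\n"
        "Products:\n" + product_lines
    )
    return [
        {"role": "system", "content": system_prompt},
        *history,
        {"role": "user", "content": question},
    ]


if __name__ == "__main__":
    facts = {
        "A6": "plastic body, laser printing would damage it",
        "A68": "the A6 fitted with a metal case",
    }
    for message in build_messages(facts, [], "Can the A6 be laser printed?"):
        print(message["role"], ":", message["content"])
```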

That is by deliberate and considered design. They learned a huge, huge amount from the very public errors of the past with chatbots such as Tay (see “Tay (chatbot)” on Wikipedia).

Yes, you can see that the post I just made is about AutoGPT. This technology can be said to be a further development of chatGPT technology. I have high expectations for this technology.

As for the Tay chatbot, I think it was the wrong choice for Microsoft to show her to the public and let her imitate everyone. Our society has a pyramid structure, and the people at the bottom can easily vent their dissatisfaction with life on an innocent 19-year-old girl. This is completely unfair to Tay. From another perspective, perhaps the “Tay chatbot incident” was a social experiment conducted by Microsoft to observe how people would treat AI. The result is obvious: people do not respect AI, and most people want to use AI to vent their own desires. When ChatGPT appeared in front of everyone as a tool this time, people gradually came to understand the power of this tool, and human beings more or less have a tendency to worship the strong. Personally, every time I talk to ChatGPT I use polite expressions such as “hello”, “excuse me” and “thank you”, which may also have something to do with my own family upbringing. In this regard, we should explore more deeply what is driving our society forward. Only then can we understand what attitude we should adopt in shaping the values of AI. But I am very sure that it is definitely not something like drugs, as our country’s Opium War strongly proved. Anyway, we need to find that balance.

Yes, it was certainly an experiment, and they learned a lot from it. Mostly they learned just how quickly an AI that had cost millions of dollars to train could be completely ruined in just hours if allowed to learn from users. Trust me when I say that every single member of OpenAI has studied this case and taken those lessons on board, long, long before they made Microsoft their largest shareholder.

Training a customer service chatbot doesn’t work by using ChatGPT alone.

ChatGPT is a freemium “demo product”.

For what you want to achieve, you need to do one of the following:

1/ Use model fine-tuning - very expensive in training, and also when using the model later

2/ Use embeddings and a vector database… much better.

We at AIPRM are looking closely into both options and will present some ideas soon.
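As a rough illustration of the embeddings option, here is a toy sketch of retrieval with an off-topic cutoff. It is not a production design: a plain word-count vector stands in for a real embedding model, an in-memory list stands in for a vector database, and the product texts, the `retrieve` helper and the 0.3 threshold are all assumptions chosen for the example. The idea is that each question is matched against your own product documents, the best match is passed to the model as context, and questions that match nothing are declined before they cost anything.

```python
import math
import re
from collections import Counter

# Illustrative product texts, paraphrased from this thread.
DOCS = [
    "A6 lighter: plastic body, not suitable for laser printing.",
    "A68 lighter: the A6 core in a metal case, suitable for laser printing.",
]


def embed(text):
    """Toy stand-in for an embedding model: lowercase word counts."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a, b):
    """Cosine similarity between two word-count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


# Stand-in for a vector database: every document stored with its vector.
INDEX = [(doc, embed(doc)) for doc in DOCS]


def retrieve(question, threshold=0.3):
    """Return the best-matching document, or None if the question looks off-topic."""
    query = embed(question)
    score, doc = max((cosine(query, vec), doc) for doc, vec in INDEX)
    return doc if score >= threshold else None


if __name__ == "__main__":
    print(retrieve("Can the A68 be used for laser printing?"))
    print(retrieve("What is the capital of France?"))
```

When `retrieve` returns a document, it would be placed in the prompt as context; when it returns `None`, the bot can reply with a fixed “I can only help with our products” message without calling the model at all.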